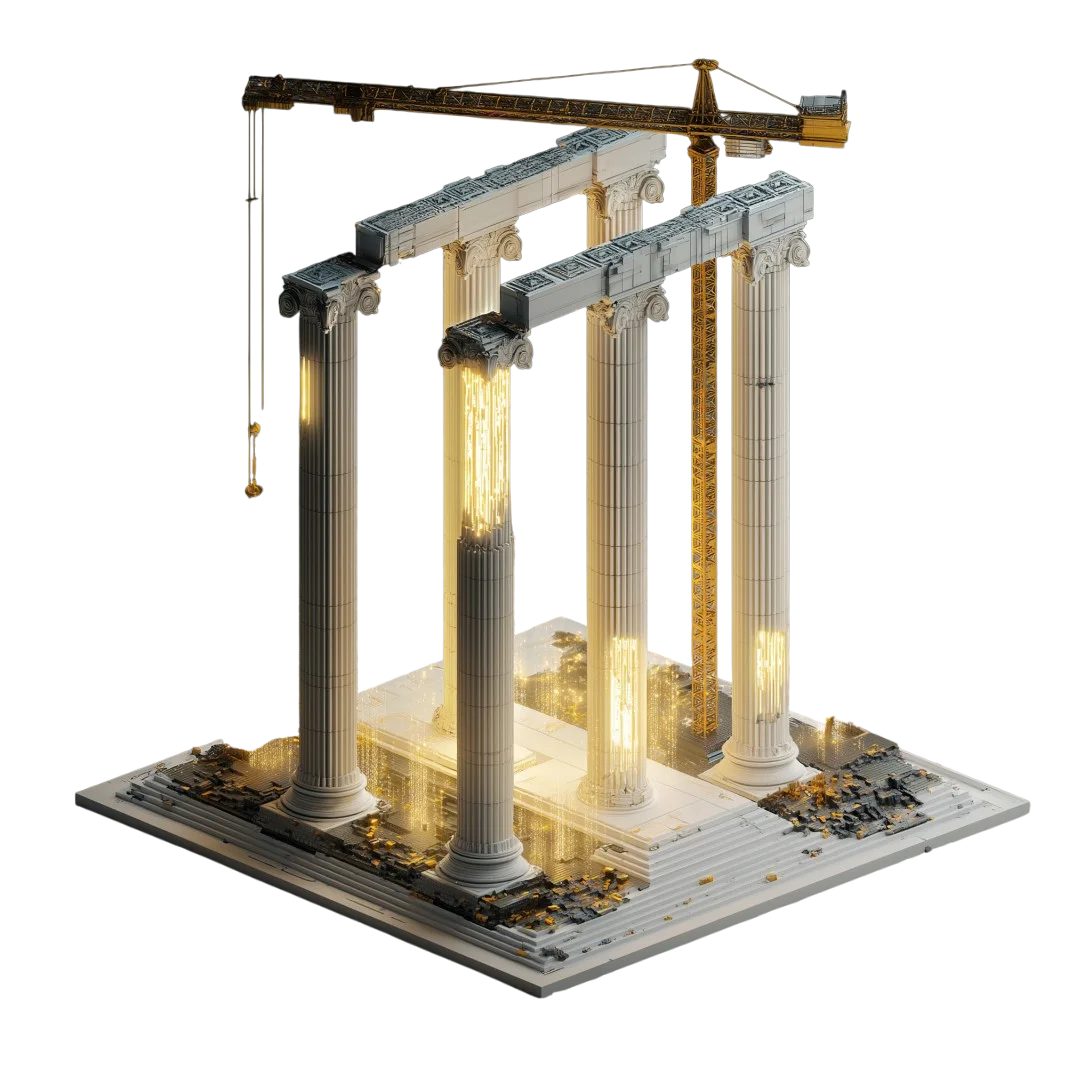

". . Models learn. Teams make them stand . ."

I spend my days between Hiring Managers, Architects, and Delivery Teams . . and the pattern is clear.

The moment an organisation ships its first ML feature, the hiring brief changes. It’s no longer, “Can they design microservices?” It becomes, “Can they design learning systems that don’t silently rot?”

Here’s how I advise Clients (and Candidates) when machine learning starts to reshape the architecture game.

What’s actually changed (and why hiring must follow)

Classic systems are deterministic - Rules in, outputs out.

AI systems are probabilistic - Data shifts, models drift, confidence varies. That means your architecture needs:

- Data as a product (owned, versioned, quality-checked)

- Model lifecycle as a first-class concern (train > evaluate > deploy > monitor > retrain)

- Operational guardrails (drift, bias, cost, and rollback paths)

- Human-in-the-loop points for low confidence or high risk

If your Team can’t speak those 4 fluently, your shiny model will age fast.
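To make "drift" concrete, here is a minimal sketch of one common check, the Population Stability Index (PSI), wired to an action. The function names, the 10 bins, and the 0.1 / 0.25 cut-offs are my assumptions for illustration (those cut-offs are a widely quoted rule of thumb, not a standard), so tune them per feature.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if the baseline has no spread.
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the baseline range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_action(score, warn=0.1, act=0.25):
    """Map a PSI score to a guardrail action (thresholds are assumptions)."""
    if score >= act:
        return "retrain_or_rollback"
    if score >= warn:
        return "alert_owner"
    return "ok"


# Toy data: the live sample has shifted into the upper half of the baseline range.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
print(drift_action(psi(baseline, baseline)))
print(drift_action(psi(baseline, shifted)))
```

The point for hiring is the last function, not the maths: a threshold that triggers a named action with a named owner is what separates a guardrail from a dashboard.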

Role archetypes I’m hiring for (and when to bring them in)

- AI Platform / MLOps Architect

Stitches training and serving together. Feature store parity, model registry, CI/CD for models, canaries/AB, observability.

- Data Product / Feature Architecture Lead

Treats features like reusable products with SLAs, lineage, and point-in-time correctness. Prevents ‘notebook drift’.

- Solution Architect (GenAI / Retrieval)

Patterns for grounding, vector stores, prompt/version tracking, guardrails, latency/cost trade-offs.

- AI Risk and Security Architect

Privacy, model governance, red-teaming, supply-chain risk, auditability. Makes regulators (and customers) sleep at night.

- Domain Architect with ML fluency

Knows the business levers and where prediction actually changes outcomes.

When: start with a fractional AI Platform/MLOps Architect to set the runway.

Add Solution/Domain depth as use-cases prove value.

What ‘great’ Candidates show (portfolio signals I look for)

- A real model lifecycle. Shadow > canary > rollback stories, not just notebooks.

- Feature store parity (offline/online) and point-in-time correctness.

- Drift and performance dashboards by cohort, with thresholds tied to automated actions.

- Clear human-in-the-loop design (when to escalate, how to learn from feedback).

- Cost-aware inference choices (distillation, caching, batch vs. real time).

- One painful incident they owned (and the architectural fix that prevented the sequel).

If a CV can’t evidence these, we’re hiring hope.
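"Point-in-time correctness" is the signal Candidates most often hand-wave, so here is a minimal sketch of what it means. The feature name, the toy data, and the `feature_as_of` helper are hypothetical; a real feature store does this join at scale, but the rule is the same: a training row may only see values that were known at the moment the label was observed.

```python
from bisect import bisect_right


def feature_as_of(history, as_of):
    """Return the latest feature value recorded at or before `as_of`.

    `history` is a list of (timestamp, value) pairs sorted by timestamp.
    Returns None when nothing was known yet at `as_of`.
    """
    timestamps = [ts for ts, _ in history]
    i = bisect_right(timestamps, as_of)
    return history[i - 1][1] if i else None


# Hypothetical feature: a customer's 30-day order count, recomputed over time.
orders_30d = [(1, 2), (5, 4), (9, 7)]  # (day, value)

# A label observed on day 6 must use the value known on day 6 (4),
# not the later value (7), which would leak the future into training.
training_value = feature_as_of(orders_30d, as_of=6)
print(training_value)
```

Online serving should run the same lookup with `as_of` set to "now". That shared logic is what offline/online parity means in practice.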

Interview blueprint (questions that separate good from great)

1. “Describe a time model performance decayed in prod. How did you detect it and what changed in the architecture?”

Look for drift detection, rollback, retraining cadence, owner/SLA.

2. “How do you keep offline training features consistent with online inference?”

Expect feature store, point-in-time joins, reproducibility.

3. “Where does a human step in, and how does their feedback improve the system?”

Escalation criteria, feedback routing, label pipelines.

4. “Show me your approach to explainability and risk.”

Model cards, reason codes, cohort analysis, governance rhythm.

5. “Trade-off a 3% accuracy gain vs. doubling latency/cost.”

Business framing, SLOs, tiered models, cache/approximation.
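A strong answer to questions 3 and 5 often lands on the same shape: a tiered route where most traffic takes the cheap model, uncertain cases pay for the stronger one, and the rest go to a person. Below is a minimal sketch of that idea. The `Prediction` type, the `route` function, the stub models, and both thresholds are my assumptions for illustration, not a prescribed design.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float


def route(cheap, strong, features, accept=0.9, escalate=0.6):
    """Try the cheap model first; pay for the strong model only when unsure.

    Returns (handler, label). A label of None means a human decides, and
    that decision should be fed back into the label pipeline.
    """
    first = cheap(features)
    if first.confidence >= accept:
        return ("cheap_model", first.label)
    second = strong(features)
    if second.confidence >= escalate:
        return ("strong_model", second.label)
    return ("human_review", None)


# Stub models standing in for real ones (hypothetical behaviour).
def cheap(features):
    return Prediction("approve", 0.95 if features["amount"] < 100 else 0.5)


def strong(features):
    return Prediction("approve", 0.8 if features["amount"] < 1000 else 0.4)


for amount in (50, 500, 5000):
    print(amount, route(cheap, strong, {"amount": amount}))
```

With this shape, the doubled latency and cost apply only to the slice of traffic the cheap model is unsure about, which turns the 3% question into a business conversation about where that slice sits.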

Red flags we advise Clients to avoid

- “We productionised the notebook.” (No.)

- One big “accuracy” number with no cohort split.

- Refactors bundled into feature PRs (review chaos).

- No owner for data quality.

- Monitoring that no one acts on.
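The "one big accuracy number" red flag is easy to demonstrate. A minimal sketch with made-up data follows; the cohort names and counts are invented purely for illustration.

```python
from collections import defaultdict


def accuracy_by_cohort(rows):
    """rows: iterable of (cohort, predicted, actual) tuples."""
    hits, totals = defaultdict(int), defaultdict(int)
    for cohort, predicted, actual in rows:
        totals[cohort] += 1
        hits[cohort] += int(predicted == actual)
    return {cohort: hits[cohort] / totals[cohort] for cohort in totals}


# Invented data: 95 returning customers (90 correct), 5 new customers (2 correct).
rows = (
    [("returning", 1, 1)] * 90
    + [("returning", 1, 0)] * 5
    + [("new", 1, 1)] * 2
    + [("new", 1, 0)] * 3
)

overall = sum(p == a for _, p, a in rows) / len(rows)
print(overall)                   # 0.92
print(accuracy_by_cohort(rows))  # "new" sits at 0.4
```

A headline of 92% hides a cohort at 40%. If the Candidate has never split a metric this way, they have probably never been paged for it either.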

How we structure a hiring plan (lightweight, outcome-first)

- Calibrate on one use-case. Success metric, SLOs, risk boundaries.

- Fractional runway. 6 to 8 weeks of an AI Platform/MLOps Architect to set the plumbing.

- Prove value. Shadow > canary > AB on a single journey.

- Scale. Hire the Solution/Domain Architect that fits the winning pattern; add Data/Feature ownership.

This beats “hire 3 Seniors and hope.”

A sharper job brief (Hiring Managers - steal this)

Role: AI Platform / MLOps Architect

Outcome: Ship a learning system that can be safely updated.

You will:

– Stand up feature store parity (offline/online) and model registry

– Design canary/rollback, drift monitoring, and human-in-the-loop

– Define SLOs for latency, cost, and model performance by cohort

– Build the pipeline for repeatable train/eval/deploy

You’ve done: A production rollout where you owned the path from shadow to stable, and fixed the first nasty incident without heroics.

AI doesn’t just change what we build - it changes how we staff and what we expect from architecture.

If you’re a Client - Design your hiring around learning systems, not frameworks.

If you’re a Candidate - Bring proof you can make models behave after the launch party.

If you want a hand (shaping the brief, building the scorecard, or finding the people who’ve actually done this in anger) . . that’s our lane!!